Meta Releases SAM 2 and What It Means for Developers Building Multi-Modal AI

Introduction

Meta’s release of Segment Anything Model for videos and images (SAM 2) represents a significant leap in AI capabilities, introducing a unified model for real-time, promptable object segmentation in both images and videos. This advancement is poised to revolutionize how developers approach multi-modal AI systems, integrating visual and textual data more seamlessly than ever before.

Overview

The original Meta Segment Anything Model (SAM) laid the groundwork for versatile object segmentation in static images. SAM 2 builds on this by extending its capabilities to video content, allowing for real-time segmentation and tracking across both images and videos with impressive accuracy. This unified model simplifies the previously complex task of video segmentation, offering a more cohesive solution for handling diverse visual data.

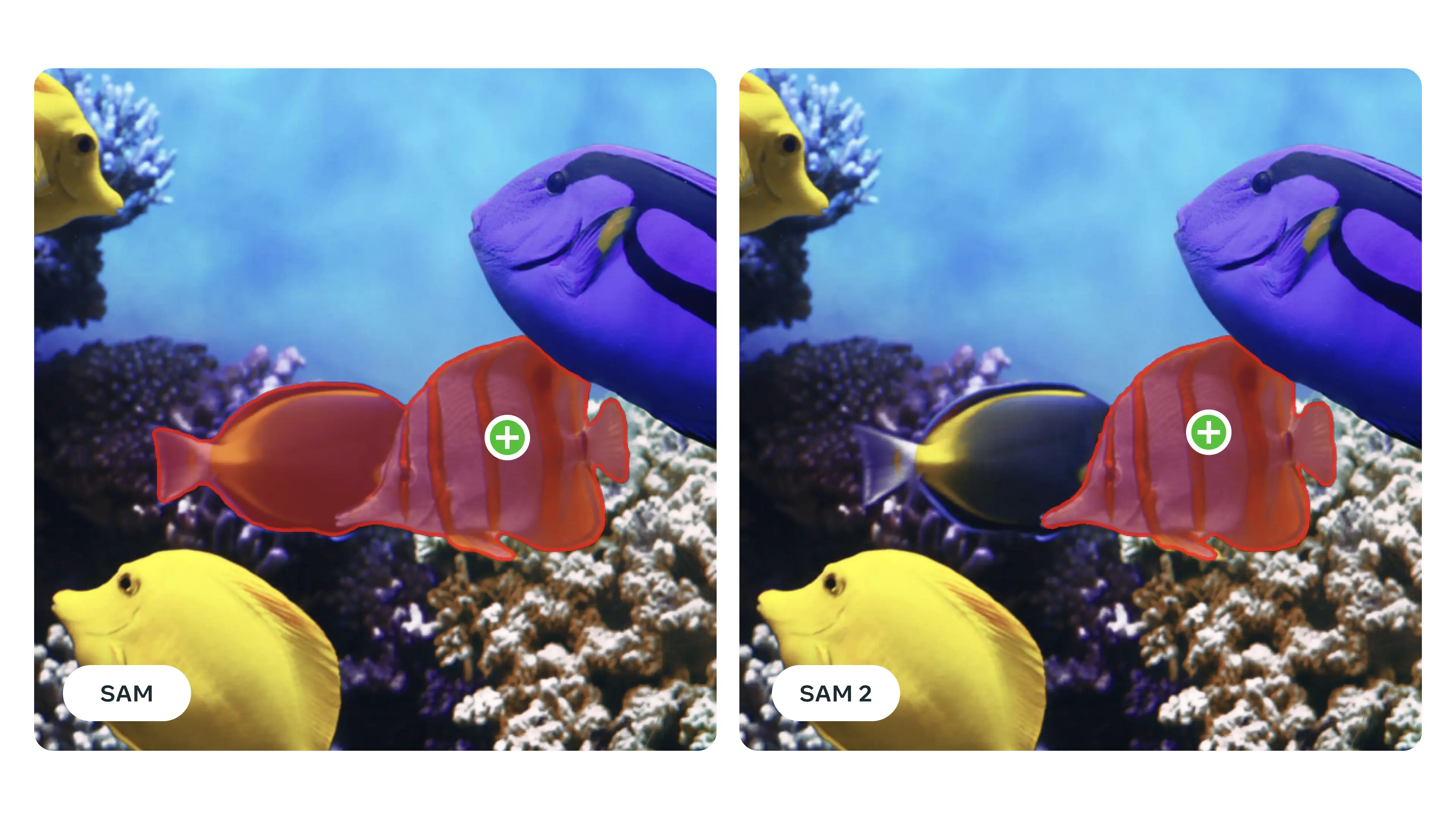

Comparing SAM and SAM 2

Meta’s SAM and SAM 2 represent two significant milestones in the evolution of object segmentation models, each advancing the field in unique ways.

Image Source: Segment Anything 2 | Meta

Image Source: Segment Anything 2 | Meta

| Feature | SAM | SAM 2 |

|---|---|---|

| Release | Initial release for image segmentation | Next-generation model with enhanced capabilities |

| Segmentation Type | Static image segmentation | Real-time segmentation for both images and videos |

| Interaction | Interactive prompts for segmenting objects in images | Unified model with improved interaction for videos |

| Object Tracking | Limited to static objects in images | Advanced memory mechanism for tracking objects across video frames |

| Accuracy | High accuracy for diverse image types | Improved accuracy with reduced interaction time for both images and videos |

| Applications | Effective for still image analysis | Suitable for both static and dynamic media applications |

| Temporal Consistency | Not applicable (image-only) | Enhanced with real-time tracking and segmentation across frames |

Practical Applications

Multimodal AI

SAM 2 can be combined with text-based LLMs to create more robust multi-modal applications. Helicone, currently the only observability tool supporting both text and image-based LLMs, is inspired by SAM 2’s capabilities and is moving towards enhanced multi-modal support, including integration with models like GPT-4 Vision and Claude 3.

Annotation and data preparation

SAM 2 accelerates the process of data labeling by providing precise and efficient object segmentation, reducing the time and effort required for annotation. This capability is especially valuable for training AI models that rely on large, accurately labeled datasets.

User interfaces and AR/VR

By integrating SAM 2’s segmentation features, developers can enhance user interfaces and create more immersive augmented reality (AR) and virtual reality (VR) experiences. The ability to segment objects in real-time opens up new possibilities for interactive and contextually aware applications in these spaces.

Creative and scientific uses

SAM 2’s advanced segmentation can be applied to creative projects, such as generating video effects or enhancing visual content creation, as well as scientific research, including tracking and analyzing moving objects in complex datasets. This versatility supports a wide range of innovative applications across industries.

SAM 2 to Helicone: Envisioning the Future of Multi-Modal AI Monitoring

Meta’s SAM 2 marks a transformative step forward in multi-modal AI, offering developers powerful tools for integrating visual data with textual information. As Helicone evolves to support multi-modal systems, including those utilizing SAM 2’s capabilities, the landscape of AI development is set to become more interconnected and insightful.